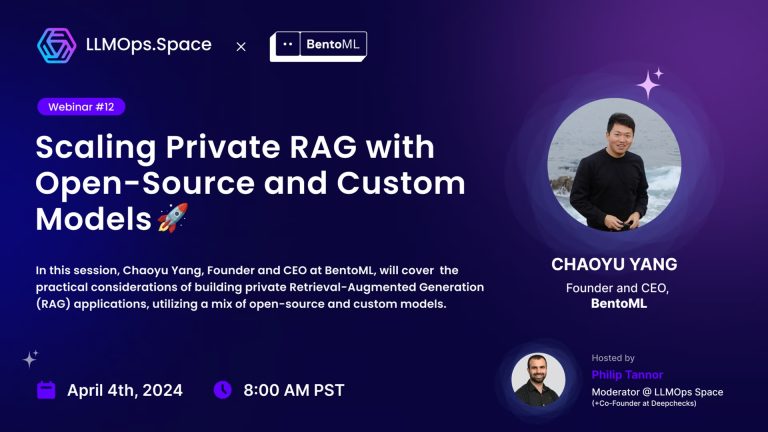

In this session, Chaoyu Yang, Founder and CEO of BentoML, discussed the practical considerations of building private Retrieval-Augmented Generation (RAG) applications using a mix of open source and custom LLMs.

He also talked about OpenLLM (https://github.com/bentoml/OpenLLM) and how it can help with LLM deployments.

Topics that were covered:

✅ The benefits of self-hosting open source LLMs or embedding models for RAG.

✅ Common best practices in optimizing inference performance for RAG.

✅ Using BentoML to build RAG as a service, seamlessly chaining language models with other components, including text and multimodal embedding models, OCR pipelines, semantic chunking, classification models, and reranking models.