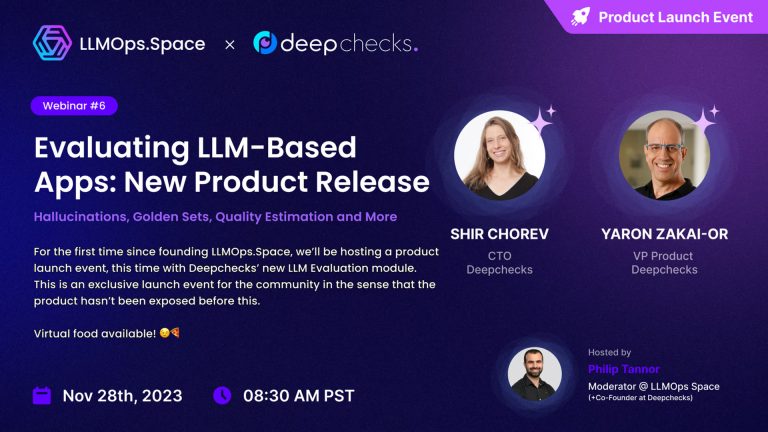

In this session, Shir Chorev, CTO at Deepchecks, and Yaron, VP Product at Deepchecks, discussed LLM hallucinations, evaluation methodologies, and golden sets, and gave a live demonstration of the new Deepchecks LLM evaluation module.

Deepchecks LLM Evaluation:

https://deepchecks.com/solutions/llm-evaluation/

Topics that were covered:

✅ Hallucinations: Cases where the model generates outputs that aren’t grounded in the context given to the LLM. We discussed this well-known problem as well as a robust approach to solving it.

✅ Evaluation Methodologies: We explored various methodologies for evaluating LLMs, including both automated and manual techniques. We also talked about how to structure the golden set used for benchmarking the LLM’s performance, and why it’s important.

✅ Deepchecks LLM Evaluation: A live demonstration of Deepchecks’ new LLM evaluation module, and the main highlight of this session.